29.07.2025

Safer Digital Services for Children

On 14 July 2025, the European Commission published the final text of its Guidelines on appropriate and proportionate measures to ensure a high level of privacy, safety and security for minors online.

Although not binding per se, these Guidelines give concrete form to the obligations laid down in Article 28 of the Digital Services Act (Regulation (EU) 2022/2065 of 19 October 2022). They are intended to guide Digital Services Coordinators (in Portugal, ANACOM) and other competent authorities (in Portugal, alongside ANACOM, the Portuguese Regulatory Authority for the Media and the Inspectorate-General for Cultural Activities) in applying that article.

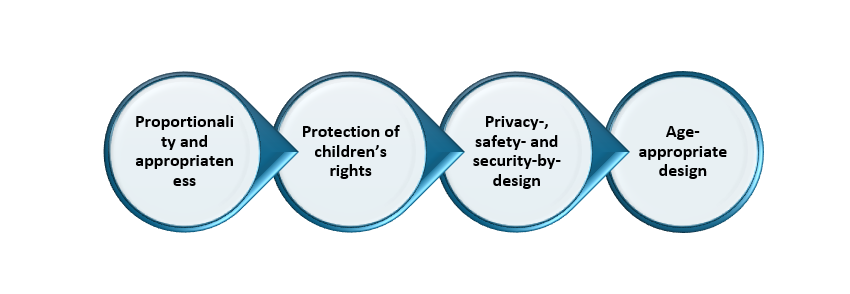

Online platforms that are accessible to minors should be configured in a way that respects a set of general principles, including privacy, safety and security by design and age-appropriate design.

The Commission puts forward recommendations to help market operators comply with these general principles and provide a high level of privacy, safety and security for minors. First, it recommends carrying out a risk review. The results of this review are intended to guide the provider in choosing the methods and technologies that shape the design of the digital service.

The Commission also considers that measures restricting access to the platform on the basis of age, and age verification methods in particular, are an effective means of ensuring a high level of privacy, safety and security for minors. However, it warns that age verification does not justify retaining more of the user's personal data than is necessary to determine their age.

In this regard, the Commission notes that the EU Digital Identity Wallet, once available, will allow users' age to be verified easily. Until then, online platform providers may adopt the age verification solution currently being developed by the European Union.

In addition, the principle of privacy, safety and security by design, together with the principle of age-appropriate design, can be met by adopting techniques such as recommender systems that take into account the specific needs and characteristics of minors.

Content moderation can also reduce minors' exposure to harmful content and behaviour. Providers of online platforms are therefore advised to develop moderation policies and procedures that define how to deal with content and behaviour that is harmful to the privacy, safety and security of minors. However, these recommendations do not amount to a general monitoring obligation.

User support measures that help children navigate the platform's services, together with specific tools for guardians, are described as effective ways of ensuring a high level of protection for minors.

Finally, the Commission recommends that providers of online platforms accessible to minors have robust platform governance in place to ensure fair commercial practices.

The publication of these Guidelines calls for providers to review the commercial practices and the terms and conditions of use of digital platforms that are accessible to children.